CS109 Final Project: Dog Superbreed Classification

Group 31: Daniel Chen, Estefania Lahera, Hailey James, Winnie Wang

Overview

We chose the dog breed classification problem: classifying dogs by breed, or possibly superbreed, using the Stanford Dogs Dataset. We attempted 7 models of varying complexity: two baselines (one that predicts the plurality class and one that predicts a uniformly random class), logistic regression, a convolutional neural network (CNN) we built from scratch without data augmentation, the same CNN with data augmentation, a CNN built on MobileNetV2 (a pre-trained model provided by Keras), and finally a CNN built on ResNet50 (also a pre-trained model provided by Keras).
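The two baselines are simple enough to sketch in a few lines. The following is a minimal illustration, not the project's actual notebook code: the function names and the toy labels are assumptions made up for this example.

```python
import random
from collections import Counter


def plurality_baseline(train_labels, n_predictions):
    """Predict the most common training label for every example."""
    most_common_label, _ = Counter(train_labels).most_common(1)[0]
    return [most_common_label] * n_predictions


def uniform_random_baseline(classes, n_predictions, seed=0):
    """Predict a class drawn uniformly at random for every example."""
    rng = random.Random(seed)
    return [rng.choice(classes) for _ in range(n_predictions)]


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Toy labels, invented for illustration (not taken from the dataset).
train_labels = ["hound", "hound", "toy", "terrier", "hound", "toy"]
test_labels = ["hound", "toy", "hound", "terrier"]
classes = sorted(set(train_labels))

plurality_preds = plurality_baseline(train_labels, len(test_labels))
random_preds = uniform_random_baseline(classes, len(test_labels))
plurality_acc = accuracy(test_labels, plurality_preds)
random_acc = accuracy(test_labels, random_preds)
```

With balanced classes, both baselines should land near one over the number of classes, which gives a floor against which the learned models can be judged.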

We classified dogs into superbreeds. We think the best model was the convolutional neural network built on MobileNetV2: it had very high accuracy (79.51% on the test set), only about 3% below the most accurate model, and was significantly faster, taking only 20 minutes compared to 50 minutes for the most accurate model.

The motivation, problem statement, results, and conclusion + future work sections are on this page. We summarize the EDA results on this page, but please see the EDA tab for the full EDA report and the Models tab for model descriptions.

Motivation

Dog breed classification is well defined in the project description, but to recap: the majority of dogs are difficult to classify by sight alone, and breed identification is important when rescuing dogs, finding them forever homes, treating them, and in various other furry situations. This project classifies purebreds, since mixed breeds are, as mentioned, often indistinguishable from purebreds. The hope is that classifying any dog, purebred or mixed, as one of the breeds it belongs to will give more information about the dog’s personality, full-grown size, and health. We were motivated to choose this project by our love of puppies!

Problem Statement

Our goal was to classify purebred dogs, using training and test data from the Stanford Dogs Dataset, into the superbreeds designated by the American Kennel Club, plus an additional category for wild dogs that includes dingoes, dholes, and the African hunting dog.

We chose to classify by superbreed due to the limited number of images per breed and the large number of breeds (120).
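Collapsing the 120 breed labels into superbreeds amounts to a lookup table. The sketch below shows the idea on a handful of breeds. The dictionary is a hypothetical excerpt, the group names ("Hound", "Sporting", and so on) and the "Wild" label are assumptions for illustration, and the real mapping used in the project may be organized differently.

```python
from collections import Counter

# Hypothetical excerpt of a breed -> superbreed lookup table.
# The full table would cover all 120 breeds in the dataset.
BREED_TO_SUPERBREED = {
    "beagle": "Hound",
    "golden_retriever": "Sporting",
    "chihuahua": "Toy",
    "german_shepherd": "Herding",
    "dingo": "Wild",
    "dhole": "Wild",
    "african_hunting_dog": "Wild",
}


def to_superbreed(breed):
    """Normalize a breed name and look up its superbreed.

    Raises KeyError for breeds missing from the table, so gaps in the
    mapping are caught early instead of being silently mislabeled.
    """
    key = breed.strip().lower().replace(" ", "_").replace("-", "_")
    return BREED_TO_SUPERBREED[key]


# Toy example: relabel a few breed names and count the resulting classes.
breeds = ["beagle", "dingo", "African hunting dog", "chihuahua"]
superbreeds = [to_superbreed(b) for b in breeds]
superbreed_counts = Counter(superbreeds)
```

Counting the relabeled classes is a quick way to check how unbalanced the superbreed classes become, since the breeds are roughly balanced but the groups contain different numbers of breeds.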

Data Description and EDA Summary

As specified in the project description, we used the Stanford Dogs Dataset, which had 12,000 training images and 8,580 test images. There were 120 breeds represented, with a roughly equal number of data points for each breed.

We performed EDA on the training set and found a wide range of image sizes, but if we only looked at the 95th percentile of image height and width, most were between 200 and 500 pixels. The mean and median pixel values per image were typically around 112, and the color (112, 112, 112) is an uninformative gray. This is unsurprising given that the mean and median were taken across all color layers and do not account for how the layers combine.
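To see why a single mean across all layers comes out as gray, consider a small synthetic example. This is a sketch using NumPy with a made-up image, not data from the project: a strongly colored image can still have an overall mean of 112, while the per-layer means preserve the color.

```python
import numpy as np

# Synthetic 4x4 RGB image where every pixel is the same orange-brown color.
# The values are chosen for illustration only.
image = np.zeros((4, 4, 3), dtype=np.uint8)
image[...] = (200, 100, 36)

# Mean over every value in the image, ignoring which layer it came from.
overall_mean = float(image.mean())

# Mean computed separately for the red, green, and blue layers.
per_channel_mean = image.mean(axis=(0, 1))

# Painting the overall mean into all three layers gives a neutral gray,
# even though no pixel in the image is gray.
gray_from_overall = (round(overall_mean),) * 3
```

Here `overall_mean` is 112.0 while `per_channel_mean` is (200, 100, 36), so summarizing each layer separately keeps information that the single number discards.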

We also computed aggregate “mean” images, which yielded interesting results: we saw that most dogs were relatively well centered, and we saw some variety between the different breed classes, especially the wild dog class.
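An aggregate mean image can be computed by averaging pixel values position by position within each class. The sketch below assumes the images have already been resized to a common shape; the function names and the toy arrays are made up for illustration and are not the project's code.

```python
import numpy as np


def mean_image(images):
    """Average a list of same-sized H x W x 3 arrays pixel by pixel."""
    stack = np.stack([np.asarray(img, dtype=np.float64) for img in images])
    return stack.mean(axis=0)


def mean_images_by_class(images, labels):
    """Group images by label and return one mean image per class."""
    grouped = {}
    for img, label in zip(images, labels):
        grouped.setdefault(label, []).append(img)
    return {label: mean_image(group) for label, group in grouped.items()}


# Toy data: three tiny 2x2 RGB "images" with constant pixel values.
images = [
    np.full((2, 2, 3), 0, dtype=np.uint8),
    np.full((2, 2, 3), 100, dtype=np.uint8),
    np.full((2, 2, 3), 50, dtype=np.uint8),
]
labels = ["Hound", "Hound", "Wild"]

class_means = mean_images_by_class(images, labels)
```

Converting to floating point before averaging avoids overflow in 8-bit pixel values; the result can be cast back to `uint8` for display.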

Please see the EDA tab for more details and the images/tables.

Models

See the Models tab for detailed descriptions of the process and links to the notebooks.

Summary of Results

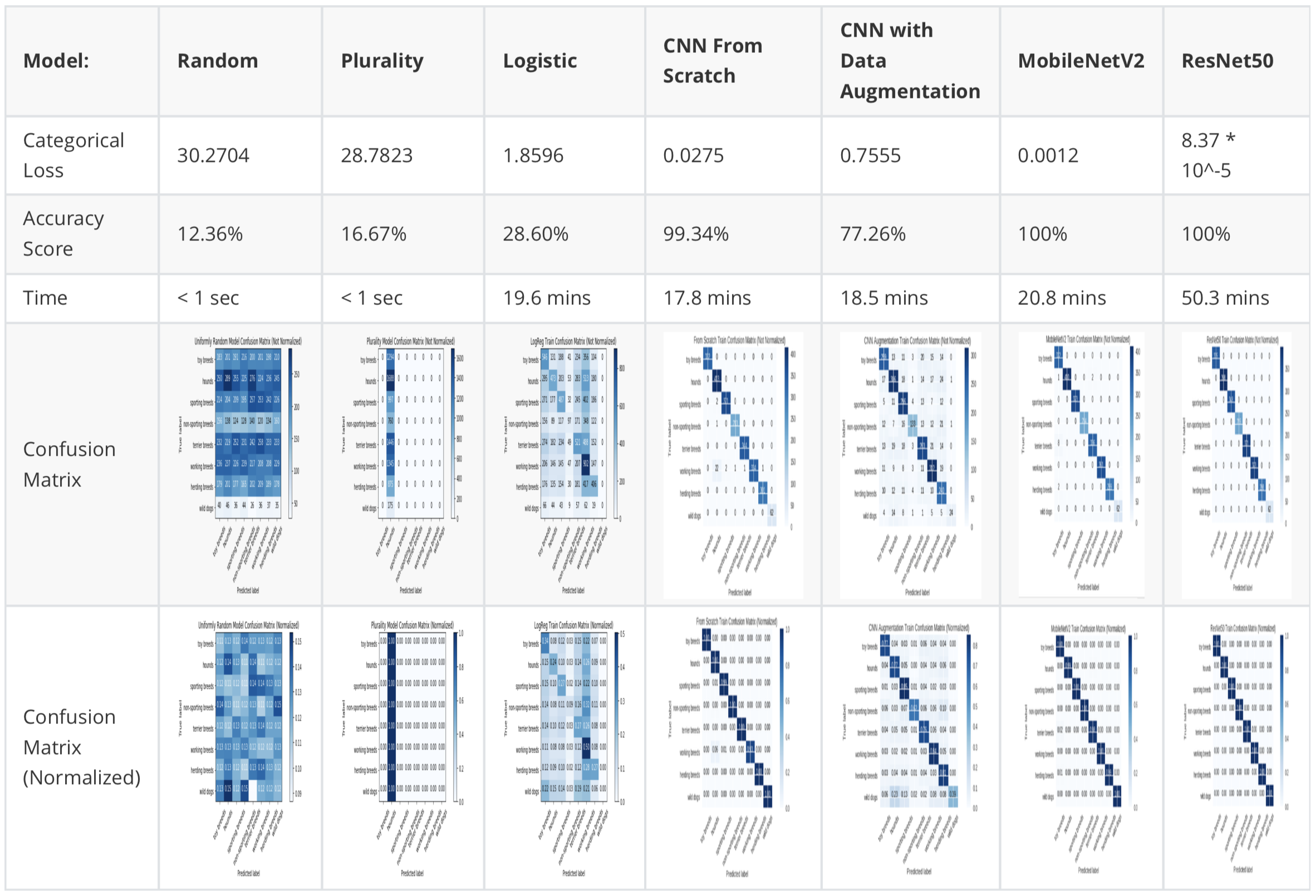

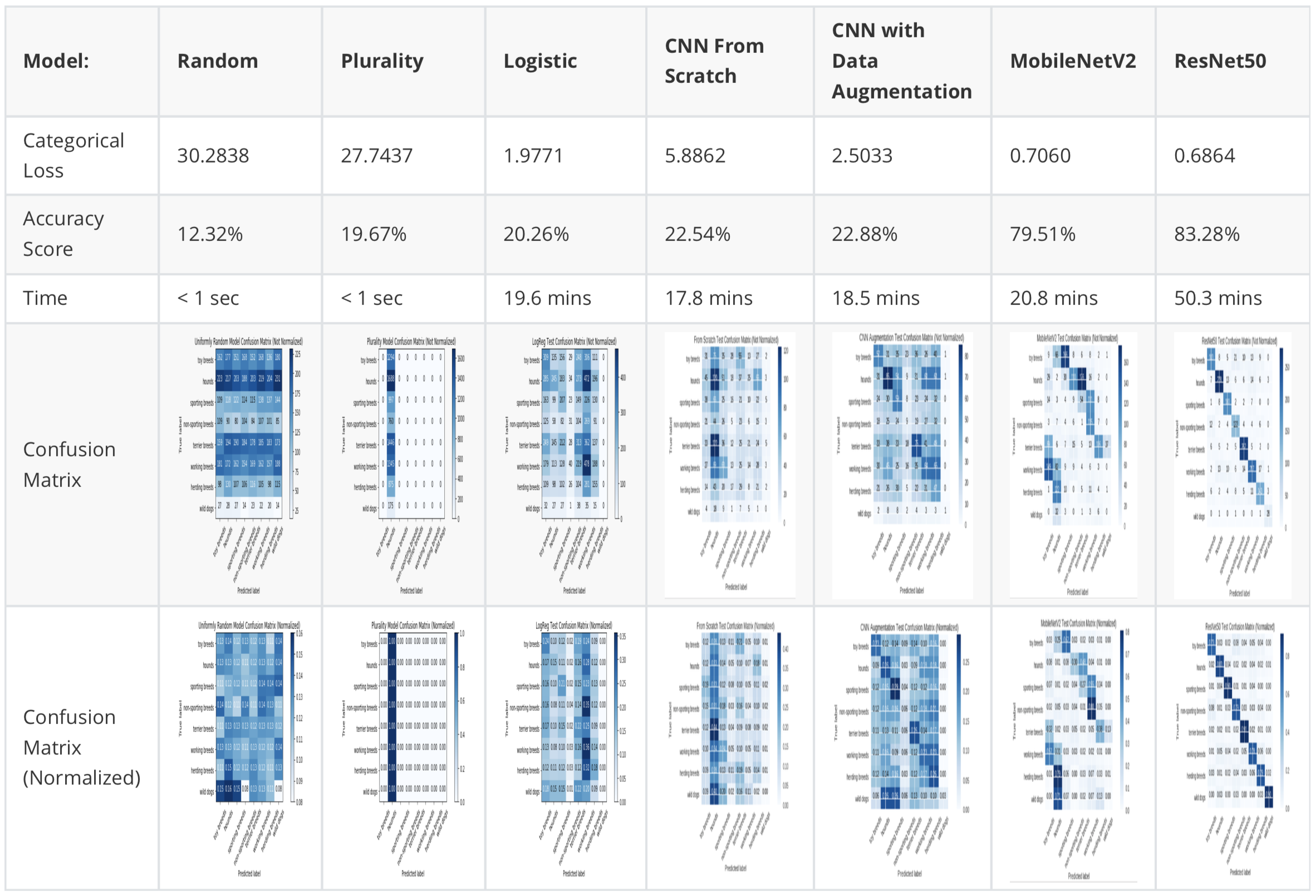

Each model page has the results of that model, but we have put all the main results here in order to compare them more easily.

We think the best model was the convolutional neural network built on MobileNetV2 because its accuracy was high both in absolute terms and relative to the other models: it reached 79.51% on the test set, only about 3% below the most accurate model, ResNet50, and significantly higher than all the other models, none of which exceeded 30% accuracy. It was also significantly faster than ResNet50, taking only 20 minutes compared to 50 minutes, and took about the same amount of time as the other CNNs and the logistic regression, which it strongly outperformed.

Training Set

Test Set

Conclusion and Future Work

In industry and even in academia, developing a CNN is challenging because there is very little established methodology for determining the architecture. There are conventional hyperparameters, but the mechanisms for selecting them are, again, not particularly well understood.

We were proud of the results we achieved with the pre-trained and “from scratch” CNNs, since they improve substantially on the baseline models, especially the pre-trained models. That said, we were severely hindered because this was our first time working with such a large data set, so it took a while to figure out how to work with the large number of images. If we had more time, we would definitely experiment with better ways to manage the data and, more importantly, investigate different architectures and hyperparameters in order to improve the model.

While we found that we had too little data to classify each breed individually, classifying by superbreed may have been the worse choice for our project, since breeds in the same superbreed often look nothing alike and thus share fewer common features. Another future direction, therefore, is to classify by actual breed rather than superbreed and see whether accuracy improves.

Image source: Google